Using virt-manager, add hardware to the VM. The primary disadvantage of this method is that you cannot select which VF you wish to insert into the VM because KVM manages it automatically, whereas with the other two insertion options you can select which VF to use.

See the commands from Step 1 above. Regardless of which VF was connected, the host device model was rtl (the hypervisor default in this case), the guest driver was cp, the link speed was Mbps, and performance was roughly Mbps.

The default device model for both the command line and the GUI is rtl, which performs 10x slower than virtio, the best option.

Parameters

When evaluating the advantages and disadvantages of each insertion method, I looked at the following:

Ease of Setup: This is an admittedly subjective evaluation parameter.

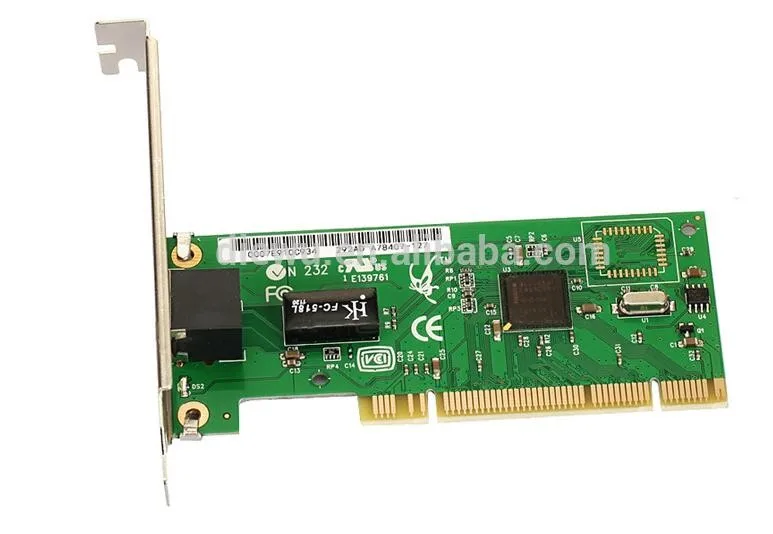

When using this method of directly inserting the PCI host device into the VM, there is no ability to change the host device model. The fact that there are two MAC addresses assigned to the same VF (one by the host OS and one by the VM) suggests that the network stack using this configuration is more complex and likely slower.

For example, in the following figure there are two VFs and the PCI bus information is outlined in red, but it is impossible to determine from this information which physical port the VFs are associated with. Additional options were available on our test host machine, but they had to be entered into the VM XML definition using virsh edit.
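When the GUI does not show which physical port a VF belongs to, sysfs can usually answer the question: each VF's PCI device directory carries a `physfn` symlink that points at its parent PF. The following is a minimal sketch under that assumption, not the original author's tooling. The function name `pf_of_vf` and the `SYSFS_ROOT` override are hypothetical and exist only for illustration and testing.

```shell
#!/usr/bin/env bash
# Sketch: print the network interface name(s) of the PF that owns a given VF.
# Assumption: the kernel exposes the usual SR-IOV sysfs layout, where
#   /sys/bus/pci/devices/<vf-address>/physfn  is a symlink to the PF device.
# SYSFS_ROOT is a hypothetical override (defaults to /sys) so the function
# can be exercised against a fake directory tree.

pf_of_vf() {
    local vf_addr="$1"
    local root="${SYSFS_ROOT:-/sys}"
    local physfn="$root/bus/pci/devices/$vf_addr/physfn"
    local entry

    # Only VFs have a physfn link; fail clearly for anything else.
    [ -e "$physfn" ] || { echo "no physfn for $vf_addr (not a VF?)" >&2; return 1; }

    # The PF's net/ directory holds one entry per interface bound to that port.
    for entry in "$physfn"/net/*; do
        [ -e "$entry" ] || continue
        printf '%s\n' "${entry##*/}"
    done
}
```

On a real host you would call it with the VF's full PCI address as shown in the hardware list, for example `pf_of_vf 0000:03:10.0` (a hypothetical address).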

There are a few downloads associated with this tutorial that you can get from GitHub.

See Step 1 above. The NIC ports on each system were in the same subnet. Performance is not significantly different from the method that involves a KVM virtual network pool of adapters.

Summary

When using the macvtap method of connecting an SR-IOV VF to a VM, the host device model had a dramatic effect on all parameters, and there was no host driver information listed regardless of configuration.

In order to find the PCI bus information for the VF, you need to know how to identify it, and sometimes the interface name that is assigned to the VF seems arbitrary. After creating the network, verify that it exists.

Additional Findings

In every configuration, the test VM was able to communicate with both the host and the external traffic generator, and the VM was able to continue communicating with the external traffic generator even when the host PF had no IP address assigned to it, as long as the PF link state on the host remained up.

After the desired VF comes into focus, click Finish. The command I ran on the server system was.

Most of the steps in this tutorial can be done using either the command-line virsh tool or the virt-manager GUI.

Operating System: Ubuntu

In the virt-manager GUI, the following typical options are available:

If you want the network to start automatically when the host machine boots, make sure that the VFs get created at boot, and then:

Select Network as the type of device.
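For the command-line route, a hostdev-mode virtual network is normally described in a small libvirt XML file and then registered with virsh. The sketch below only generates that XML. The function name `hostdev_network_xml`, the network name, and the interface name are hypothetical placeholders, and the virsh steps are left as comments because they need a running libvirt daemon and a PF with VFs already created.

```shell
#!/usr/bin/env bash
# Sketch: emit a libvirt network definition that hands out the VFs of one PF.
# Assumptions: the first argument is a network name of your choosing and the
# second is the PF's interface name on the host; both are illustrative.

hostdev_network_xml() {
    local net_name="$1"
    local pf_if="$2"
    cat <<EOF
<network>
  <name>${net_name}</name>
  <forward mode='hostdev' managed='yes'>
    <pf dev='${pf_if}'/>
  </forward>
</network>
EOF
}

# Typical follow-up on the host (not executed here):
#   hostdev_network_xml sr-iov-net enp3s0f0 > sr-iov-net.xml
#   virsh net-define sr-iov-net.xml     # register the network
#   virsh net-start sr-iov-net          # start it now
#   virsh net-autostart sr-iov-net      # start it on every host boot
#   virsh net-list --all                # confirm it is listed
```

Keeping the XML generation separate from the virsh calls makes it easy to inspect the definition before libvirt sees it.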

I additionally evaluated the following:

On the left side, click Network to add a network adapter to the VM. To autostart the network when the host machine boots, select the Autostart box so that the text changes from Never to On Boot.

I built the driver from source and then loaded it into the kernel.

Select the hostdev network as the Network source, allow virt-manager to select a MAC address, and leave the Device model as Hypervisor default.

A bash script can list all the VFs associated with a physical function.

The virtual network created in step 1 appears in the list.

Network Configuration

The test setup included two physical servers (net2s22c05 and net2s18c03) and one VM (sr-iov-vf-testvm) that was hosted on net2s22c
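Listing the VFs that belong to a physical function can be sketched with sysfs alone, since the kernel exposes each VF as a `virtfn<N>` symlink under the PF's device directory. This is an illustrative reconstruction under that assumption, not the script from the original tutorial. The function name `list_vfs` and the `SYSFS_ROOT` override are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: list every VF of one PF as "<virtfn name> <PCI address>".
# Assumption: /sys/class/net/<pf>/device/virtfn<N> symlinks exist for each VF.
# SYSFS_ROOT is a hypothetical override (defaults to /sys) used for testing.

list_vfs() {
    local pf="$1"
    local root="${SYSFS_ROOT:-/sys}"
    local dev_dir="$root/class/net/$pf/device"
    local link

    for link in "$dev_dir"/virtfn*; do
        # If the glob matched nothing, the PF currently has no VFs.
        [ -L "$link" ] || continue
        # The symlink target ends in the VF's PCI address.
        printf '%s %s\n' "${link##*/}" "$(basename "$(readlink "$link")")"
    done
}
```

Called as `list_vfs <pf-interface>` on the host, it prints one line per VF, and the PCI address in the second column is what you would match against the hardware list in virt-manager.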
